Applications of machine learning have grown rapidly over the past few years, and with that growth come more opportunities for malicious attacks that target vulnerabilities in ML systems.

The rise in threats has prompted OWASP to release a list of the vulnerabilities most likely to affect machine learning systems. This article discusses the OWASP Machine Learning Top 10 in detail.

What is OWASP Machine Learning Top 10?

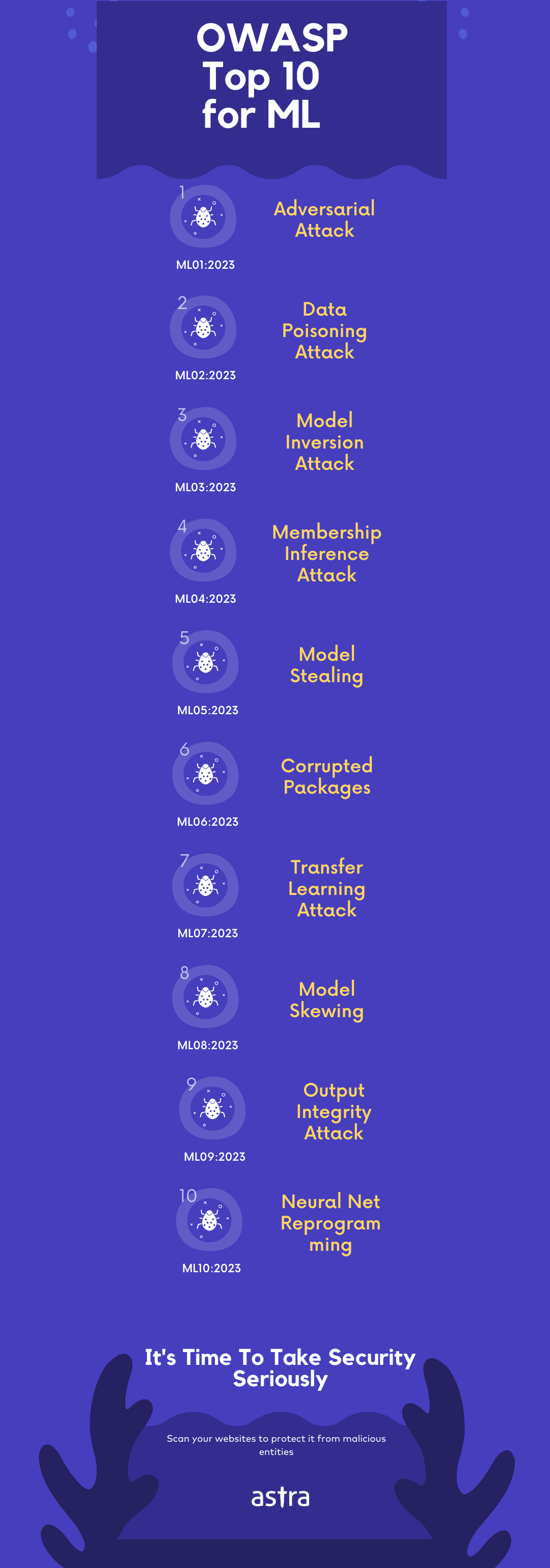

OWASP Machine Learning Top 10 is a list of the vulnerabilities that pose the greatest risk to machine learning systems. The list was introduced to educate developers and organizations about the potential threats that may arise in ML. The OWASP Top 10 for machine learning includes:

- Adversarial Attack

- Data Poisoning Attack

- Model Inversion Attack

- Membership Inference Attack

- Model Stealing

- Corrupted Packages

- Transfer Learning Attack

- Model Skewing

- Output Integrity Attack

- Neural Net Reprogramming

OWASP Machine Learning Security Top 10 In Detail

The above-mentioned list of Top 10 OWASP machine learning security vulnerabilities will be explained in further detail in this section.

ML01-2023: Adversarial Attack

This vulnerability refers to an attack where the attacker deliberately alters the input data to mislead the machine learning model. Two example scenarios are image classification and network intrusion detection.

Image Classification

In this scenario, the attacker adds small perturbations to an image so that the machine learning model misclassifies it, even though the image looks unchanged to a human. In a deployed system, this can let the attacker bypass the model or harm the system that relies on it.
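The idea of a small, targeted perturbation can be sketched with a toy example. The snippet below is only an illustration, assuming a tiny logistic-regression model in place of a real image classifier and a fast-gradient-sign style step; the weights, input values, and `epsilon` are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Move every feature by +/- epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the loss
    with respect to input feature i is (p - y) * w_i.
    """
    p = predict_proba(weights, bias, x)
    return [xi + epsilon * sign((p - true_label) * w) for xi, w in zip(x, weights)]

# Hypothetical toy model and input (stand-ins for an image classifier and an image).
weights = [2.0, -1.0, 0.5]
bias = 0.0
x = [0.2, 0.1, 0.2]  # original input, classified as class 1

x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.2)

print(predict_proba(weights, bias, x))      # above 0.5: class 1
print(predict_proba(weights, bias, x_adv))  # below 0.5: prediction flipped
```

No feature moves by more than `epsilon`, yet the predicted class changes, which is the behavior this attack relies on.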

Network Intrusion

A machine learning model is trained to detect intrusions within a network. In this attack, the attacker carefully crafts adversarial network traffic, for example by manipulating IP addresses and payloads, so that it evades the model’s intrusion detection. This can lead to serious consequences such as system compromise or data theft.

ML02-2023: Data Poisoning Attack

Such an attack occurs when the training data for the machine learning model is manipulated so that the model behaves in an undesirable manner. Two example scenarios are described below.

Training of a Spam Classifier

Here, the training data that teaches the model to distinguish spam from legitimate email is poisoned or manipulated. The attacker injects maliciously labeled spam emails into the training data by hacking or exploiting a vulnerability in the software or network. The same effect can be achieved by mislabeling existing training data.

Training of Network Traffic Classification System

The training data for the network traffic classification system is poisoned through incorrect labeling. As a result, the model assigns network traffic to the wrong categories.
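The effect of mislabeled training data can be sketched with a toy classifier. This is an illustration only, assuming a nearest-centroid spam filter over a single hypothetical feature (a count of suspicious keywords); none of the data comes from a real system.

```python
def train_centroids(samples):
    """Return the mean feature value per label for (feature, label) pairs."""
    totals, counts = {}, {}
    for value, label in samples:
        totals[label] = totals.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: totals[label] / counts[label] for label in totals}

def classify(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Hypothetical clean training data: (suspicious keyword count, label).
clean = [(1, "ham"), (2, "ham"), (3, "ham"), (4, "ham"),
         (8, "spam"), (9, "spam"), (10, "spam"), (11, "spam")]

# Spam-like samples that the attacker injects with the wrong label.
injected = [(12, "ham"), (13, "ham"), (14, "ham"), (12, "ham")]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + injected)

print(classify(clean_model, 7))     # spam
print(classify(poisoned_model, 7))  # ham: the poisoned model lets it through
```

Four mislabeled samples are enough here to drag the "ham" centroid toward spam-like values and change the decision for a borderline message.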

ML03-2023: Model Inversion Attack

The trained machine learning model is reverse engineered in this scenario to extract information from it. Two of the possible ways such an attack can be executed are through stealing personal information and bypassing a bot detection model.

Stealing Personal Information

This attack is executed by training a model for face recognition and then using that model to invert the predictions of another face recognition model. The attacker does this by exploiting vulnerabilities in the model implementation or API, which allows personal information to be recovered.

ML04-2023: Membership Inference Attack

In such an attack, the attacker probes the machine learning model to determine whether a specific record was part of its training data, which can expose sensitive information about the individuals in that data set. An attack like this becomes possible when the ML system does not have proper access controls, data encryption, or backup and recovery techniques.

Inference attacks result in incorrect model predictions, loss of sensitive data, compliance violations, and damage to reputation. Such an attack can be carried out to infer the financial history and sensitive information of individuals from financial record datasets.
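Membership inference is commonly described as deciding whether a given record was part of a model's training set. The sketch below shows one simple variant, a confidence-threshold test, under the assumption that the model is noticeably more confident on records it was trained on. The toy model, the records, and the threshold are all hypothetical.

```python
def model_confidence(training_points, x):
    """Toy overfit model: confidence decays with distance to the nearest training record."""
    nearest = min(abs(x - t) for t in training_points)
    return 1.0 / (1.0 + nearest)

def infer_membership(confidence, threshold=0.9):
    """Guess that a record was in the training set when the model is very confident on it."""
    return confidence >= threshold

# Hypothetical private training records (for example, account balances).
training_points = [12.0, 47.5, 83.0]

# Records the attacker wants to test; the attacker only sees confidence scores.
candidates = [47.5, 60.0]

guesses = {x: infer_membership(model_confidence(training_points, x)) for x in candidates}
print(guesses)  # {47.5: True, 60.0: False}
```

Limiting how much confidence information the model returns, and reducing overfitting, both make this kind of guess less reliable.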

ML05-2023: Model Stealing

In this attack, the attacker tries to gain access to the ML model’s parameters. It mostly occurs when model parameters are left unsecured, making it easier for an attacker to access them and steal the model or the information it contains.

With such an attack, the confidentiality of the model and the reputation of the organization that built it would be severely affected. Lack of encryption and insufficient monitoring and auditing are some of the weaknesses that pave the way for such an attack.

ML06-2023: Corrupted Packages

A corrupted package attack occurs when an attacker modifies or replaces an ML library or model that is used by the system. This usually happens because of reliance on untrustworthy third-party code or heavy reliance on open-source packages, which can be modified to affect the system once they are downloaded.

Such an attack tends to go unnoticed for a long period, compromising the machine learning process and harming the organization through modified results or data theft.

ML07-2023: Transfer Learning Attack

This type of attack occurs when the attacker trains a model for one task and then fine-tunes it to have an undesirable effect on another task. It usually occurs due to a lack of proper data protection measures and insecure data storage, which leave the model susceptible to manipulation.

The attack produces misleading and incorrect results from the ML system, causing identity manipulation, confidentiality breaches, reputational harm, and regulatory issues for the company.

ML08-2023: Model Skewing

Here, an attacker manipulates the distribution of the training data, causing the machine learning system to behave in a harmful manner. This usually occurs due to data bias, incorrect data sampling, or manipulation of data sets by the attacker.

Model skewing attacks can cause significant damage, such as biased output that leads to incorrect decisions, financial loss, and damage to reputation. Such erroneous results can also harm individuals in areas such as medical diagnosis and criminal justice.

ML09-2023: Output Integrity Attack

In an output integrity attack, the attacker modifies or manipulates the output of the machine learning system, causing it to change its behavior and harming the system that the ML is used in. ML models are susceptible to such an attack when there is a lack of proper authentication and authorization measures, and insufficient validation, verification, and monitoring of data sets.

A successful output integrity attack results in a loss of confidence in the ML model and its predictions, financial loss, damage to the reputation of the company, and even security risks.

ML10-2023: Neural Net Reprogramming

Here, the parameters of the machine learning model are manipulated, causing the system to behave in an undesirable way. The system is susceptible to the attack when it has insufficient access controls, insecure coding practices, or inadequate monitoring of the model’s activity.

It can result in the manipulation of the model’s results and predictions to obtain desired results. Extraction of confidential information, biased decisions based on inaccurate model predictions, and damage to the credibility and reputation of the organizations are a few other consequences of neural net reprogramming.

How To Prevent OWASP Machine Learning Top 10?

Here are some of the ways suggested by OWASP to stay ahead of the pesky vulnerabilities mentioned above.

Robust Models

This refers to using models that are designed to withstand adversarial attacks. Training, retraining, and adversarial training reduce the chances of a successful attack and give the system's defense mechanisms a better chance of responding in time.

Data Validation And Verification

Ensure that the training data fed to the machine learning model is thoroughly validated and verified before it is used for model training. Data validation checks and multiple data labelers should be employed to ensure the accuracy of the data that is labeled. Data validation can be subcategorized into input validation and model validation.
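One way to use multiple data labelers is to accept a label only when enough of them agree. The following is a minimal sketch of that idea; the `min_agreement` value and the sample annotations are hypothetical and would need tuning for a real labeling workflow.

```python
from collections import Counter

def consensus_label(labels, min_agreement=0.6):
    """Return the majority label, or None when the labelers disagree too much."""
    label, votes = Counter(labels).most_common(1)[0]
    return label if votes / len(labels) >= min_agreement else None

# Hypothetical annotations from three independent labelers.
annotations = {
    "email_1": ["spam", "spam", "spam"],
    "email_2": ["spam", "ham", "spam"],
    "email_3": ["spam", "ham", "unsure"],
}

accepted, needs_review = {}, []
for item, labels in annotations.items():
    label = consensus_label(labels)
    if label is None:
        needs_review.append(item)   # hold back for manual review
    else:
        accepted[item] = label      # safe to add to the training set

print(accepted)      # {'email_1': 'spam', 'email_2': 'spam'}
print(needs_review)  # ['email_3']
```

A single compromised or careless labeler cannot change an accepted label on their own under this scheme.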

Input Validation

Here, the input is analyzed for anomalies such as unexpected values or patterns, and inputs that are likely to be malicious are detected and rejected. It is also prudent to check certain properties of the input, such as consistency, format, and range, before it is processed.
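The format, range, and consistency checks described above can be sketched as a small validation function. The expected feature count and the allowed range below are hypothetical and would come from the model's actual input specification.

```python
import math

EXPECTED_FEATURES = 4        # hypothetical input size
FEATURE_RANGE = (0.0, 1.0)   # hypothetical allowed range after normalization

def validate_input(features):
    """Return a list of problems; an empty list means the input passed every check."""
    if not isinstance(features, (list, tuple)):
        return ["input must be a list or tuple of numbers"]

    problems = []
    if len(features) != EXPECTED_FEATURES:
        problems.append(f"expected {EXPECTED_FEATURES} features, got {len(features)}")

    low, high = FEATURE_RANGE
    for i, value in enumerate(features):
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            problems.append(f"feature {i} is not numeric")
        elif math.isnan(value) or math.isinf(value):
            problems.append(f"feature {i} is NaN or infinite")
        elif not low <= value <= high:
            problems.append(f"feature {i} is outside [{low}, {high}]")
    return problems

print(validate_input([0.1, 0.5, 0.9, 0.0]))            # []
print(validate_input([0.1, 5.0, float("nan"), "x"]))   # three problems reported
```

Inputs that return a non-empty list would be rejected or logged before they ever reach the model.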

Model Validation

Validate the machine learning model using a separate validation set that has not been used in training. A drop in performance on this trusted set can reveal data poisoning that has affected the training data.
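A validation gate of this kind can be sketched as follows, assuming a trusted held-out set and a known baseline accuracy. The two toy predictors, the data, and the `max_drop` tolerance are hypothetical.

```python
def accuracy(predict, examples):
    """Share of (features, label) pairs the predictor gets right."""
    correct = sum(1 for features, label in examples if predict(features) == label)
    return correct / len(examples)

def passes_validation(predict, validation_set, baseline_accuracy, max_drop=0.05):
    """Accept a model only if its held-out accuracy stays close to the known baseline."""
    return accuracy(predict, validation_set) >= baseline_accuracy - max_drop

# Hypothetical trusted validation set: (suspicious keyword count, label).
validation_set = [(1, "ham"), (2, "ham"), (3, "ham"),
                  (7, "spam"), (8, "spam"), (9, "spam")]

def healthy_model(count):
    return "spam" if count >= 6 else "ham"

def poisoned_model(count):
    # Decision boundary shifted by poisoned training data.
    return "spam" if count >= 9 else "ham"

print(passes_validation(healthy_model, validation_set, baseline_accuracy=1.0))   # True
print(passes_validation(poisoned_model, validation_set, baseline_accuracy=1.0))  # False
```

The check only works if the validation set itself is stored and labeled independently of the training pipeline.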

Data Safety

Here are some of the ways data safety can be ensured.

Secure Data Storage

Use encryption, secure data transfer protocols, and firewalls to store and move the training data safely, so that it cannot be tampered with.
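Encryption setups vary by platform, so the sketch below covers only the tamper-detection side: it records a keyed fingerprint (HMAC-SHA256) of the training data and checks it again before training. This is integrity checking rather than encryption, and the key and data shown are hypothetical placeholders; a real key would live in a secrets manager.

```python
import hashlib
import hmac

def fingerprint(data: bytes, key: bytes) -> str:
    """Keyed SHA-256 fingerprint; it cannot be recomputed without the key."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()

def is_untampered(data: bytes, key: bytes, expected: str) -> bool:
    """Compare fingerprints in constant time to avoid leaking information via timing."""
    return hmac.compare_digest(fingerprint(data, key), expected)

# Hypothetical key and training data.
key = b"replace-with-a-secret-key"
training_data = b"email_1,spam\nemail_2,ham\n"

# Stored when the data set is approved.
approved_fingerprint = fingerprint(training_data, key)

# Checked again right before training.
tampered_data = training_data + b"email_3,ham\n"
print(is_untampered(training_data, key, approved_fingerprint))  # True
print(is_untampered(tampered_data, key, approved_fingerprint))  # False
```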

Data Separation

Separate the training data from the production data to reduce exposure and the chances of data compromise.

Access Control

Access control should be implemented to restrict access to the training data.

Encryption

The model’s code, its training and retraining data sets, and any other sensitive information should be protected with appropriate encryption to prevent attackers from accessing and stealing confidential assets.

Monitoring And Auditing

Monitoring and auditing the data at regular intervals can help detect anomalies and data tampering. Models should also be tested regularly for abnormalities to prevent attacks such as inference attacks. Auditing can be done through OWASP penetration testing.

Anomaly Detection

Anomaly detection methods can be used to detect any abnormal behavior in the training data such as changes in data distribution or labeling and help in early data poisoning detection.
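One simple check for a change in labeling is to compare the label distribution of each new batch against a trusted reference. The sketch below uses total variation distance for that comparison; the batches and the alert threshold are hypothetical, and production systems often use richer statistical tests.

```python
from collections import Counter

def label_shares(labels):
    """Fraction of the data set that carries each label."""
    total = len(labels)
    return {label: n / total for label, n in Counter(labels).items()}

def distribution_shift(reference, batch):
    """Total variation distance between label distributions (0 = identical, 1 = disjoint)."""
    ref, new = label_shares(reference), label_shares(batch)
    return 0.5 * sum(abs(ref.get(l, 0.0) - new.get(l, 0.0)) for l in set(ref) | set(new))

ALERT_THRESHOLD = 0.2  # hypothetical tolerance

# Hypothetical trusted reference and two incoming batches.
reference = ["ham"] * 80 + ["spam"] * 20
normal_batch = ["ham"] * 78 + ["spam"] * 22
suspicious_batch = ["ham"] * 50 + ["spam"] * 50

for name, batch in [("normal", normal_batch), ("suspicious", suspicious_batch)]:
    shift = distribution_shift(reference, batch)
    print(name, round(shift, 2), "ALERT" if shift > ALERT_THRESHOLD else "ok")
```

A batch that triggers the alert would be held back for review instead of going straight into training.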

Model Training

This can be classified into adversarial training and retraining.

Adversarial Training

Adversarial training refers to training the deep learning model on adversarial examples in addition to clean data. This makes the machine learning model more robust to adversarial inputs and reduces the chances of the model being misled by an adversarial attack.
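The training loop can be sketched on a toy model. This is a minimal illustration, assuming a logistic-regression classifier and fast-gradient-sign perturbations rather than a deep network; the data set, `epsilon`, learning rate, and epoch count are hypothetical.

```python
import math

def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to keep exp() from overflowing
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm(w, b, x, y, epsilon):
    """Perturb x by +/- epsilon per feature in the direction that increases the loss."""
    p = predict_proba(w, b, x)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, epsilon=0.5, lr=0.1, epochs=200):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        # Pair every clean example with an adversarial copy built against the current model.
        batch = data + [(fgsm(w, b, x, y, epsilon), y) for x, y in data]
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b

# Hypothetical two-class data set: (features, label).
data = [([2.0, 2.0], 1), ([3.0, 2.0], 1), ([2.0, 3.0], 1), ([3.0, 3.0], 1),
        ([-2.0, -2.0], 0), ([-3.0, -2.0], 0), ([-2.0, -3.0], 0), ([-3.0, -3.0], 0)]

w, b = adversarial_train(data)
robust = all((predict_proba(w, b, fgsm(w, b, x, y, 0.5)) >= 0.5) == bool(y) for x, y in data)
print(robust)  # True: perturbed inputs are still classified correctly
```

In practice the same loop is run with a deep learning framework, and the perturbation budget is chosen to match the attacks the model is expected to face.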

Model Retraining

Here, the machine learning model is regularly retrained to ensure that the system remains up to date, thus reducing the chances of information leakage from model inversion. The data set used for retraining contains new data and corrects any inaccuracies in the model’s current predictions.

Legal Protection

Ensure that the ML model is adequately protected by law, for example through patents and trade secrets. This makes it harder for attackers to steal or use the model code and sensitive data, and it provides a solid basis for legal action in case of theft.

Conclusion

This article has detailed the top risks and attacks that threaten the security and integrity of machine learning models today, based on the OWASP Machine Learning (ML) Top 10. The OWASP ML Top 10 was introduced to raise awareness of the issues that plague ML models so that developers and organizations can guard against them. The precautionary measures mentioned can go a long way in helping avoid these issues and build a secure, bias-free ML model.