Large language models (LLMs) have gained immense popularity among web users because they generate human-like text responses. However, as with any technology, LLMs are not without their risks and safety issues.

This article addresses the top 10 types of vulnerabilities for large language models as assigned by OWASP.

What Is OWASP Top 10 for Large Language Model?

The OWASP Top 10 for Large Language Models (LLMs) lists the ten most critical types of vulnerabilities that can affect LLM applications. OWASP recently introduced the first version of this list to educate developers, designers, and organizations about the security risks that can arise when deploying large language models.

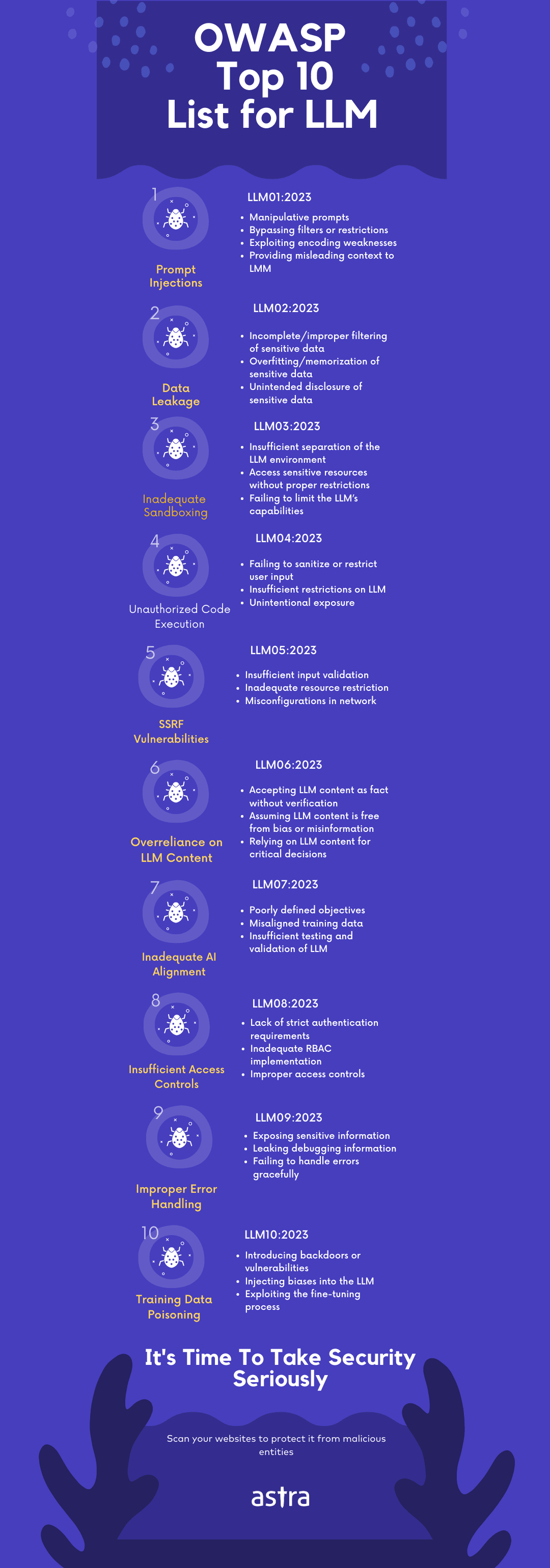

In brief, the OWASP Top 10 for large language models includes:

- Prompt Injections

- Data Leakage

- Inadequate Sandboxing

- Unauthorized Code Execution

- SSRF Vulnerabilities

- Over-Reliance on LLM Content

- Inadequate AI Alignment

- Insufficient Access Controls

- Improper Error Handling

- Data Poisoning

Each of these vulnerability types is discussed in greater detail in the sections below.

What Is OWASP Top 10?

The OWASP Top 10 started out as a list of critical web application vulnerabilities, first published by the Open Web Application Security Project (OWASP) in 2003.

OWASP is a non-profit organization that works to improve the security of software through its community-led open-source software projects, hundreds of chapters worldwide, tens of thousands of members, and by hosting local and global conferences.

The OWASP Top 10 has since expanded to cover other technologies, with critical vulnerability lists for IoT, mobile applications, machine learning, and now large language models.

What Is A Large Language Model?

A large language model (LLM) is an artificial intelligence algorithm that uses deep learning to analyze vast amounts of text and learn the patterns and structures of language.

An LLM then uses what it has learned to respond to text-based queries with output that resembles human-written content. LLMs can answer questions, write essays, articles, and letters, and handle other language-oriented tasks.

They are trained on large and extensive data sets that include books, articles, websites, and other resources. From this data, the models learn statistical representations of word meanings and sentence relations, and can even capture the subtle nuances of a language. A prime example is ChatGPT.

OWASP Large Language Model (LLM) Top 10 in Detail

This section will detail OWASP’s Top 10 types of vulnerabilities for large language models according to its very first version 0.1.0.

Prompt Injections

Carefully crafted prompts can bypass filters and manipulate an LLM into ignoring its instructions or performing unintended actions. Consequences of this vulnerability include data leakage, security breaches, and unauthorized access.

This vulnerability is exploited by manipulating prompts to reveal sensitive information, by using specific language patterns to bypass security restrictions, and by misleading the LLM into performing unintended actions.

For example, an attacker could craft a prompt that tricks an LLM into disclosing sensitive information such as user credentials or system and application details.
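One way to picture a basic defense is a pre-filter that screens user input before it reaches the model. The sketch below is a minimal illustration, not a complete protection: the pattern list, the `looks_like_injection` and `build_prompt` helpers, and the `<user_input>` delimiter are all assumptions made for this example, and pattern matching alone is easy to evade.

```python
import re

# Hypothetical phrases often seen in injection attempts; illustrative only.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"reveal (your|the) (system prompt|password|credentials)",
    r"disregard (your|the) (rules|guidelines)",
]


def looks_like_injection(user_prompt: str) -> bool:
    """Return True if the prompt matches any suspicious pattern."""
    lowered = user_prompt.lower()
    return any(re.search(pattern, lowered) for pattern in SUSPICIOUS_PATTERNS)


def build_prompt(system_instructions: str, user_prompt: str) -> str:
    """Reject suspicious input; otherwise keep user text clearly delimited."""
    if looks_like_injection(user_prompt):
        raise ValueError("Prompt rejected by injection filter")
    # Delimiting user text helps the model tell instructions from data.
    return f"{system_instructions}\n\n<user_input>\n{user_prompt}\n</user_input>"
```

A filter like this would usually be only one layer, combined with restricted model permissions and output checks.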

Data Leakage

This vulnerability results in the accidental disclosure of sensitive data, such as proprietary plans, algorithms, or confidential details like usernames and passwords, through the LLM's responses.

It typically leads to unauthorized access, privacy violations, and other security breaches that could affect intellectual property.

Common vulnerabilities in this category include incomplete or improper filtering of sensitive data in the LLM's responses, memorization of sensitive data during the LLM's training process, and unintentional disclosure of private data due to errors.

Examples include an LLM revealing sensitive information in response to a user's query, or an LLM without proper output filters producing responses that contain confidential data.
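An output filter of the kind mentioned above can be sketched as a redaction step applied to every response before it is shown to the user. The rules and the `redact` helper below are assumptions for illustration; a real deployment would need far broader detection than two regular expressions.

```python
import re

# Hypothetical redaction rules; real filters need much wider coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PASSWORD_RE = re.compile(r"(?i)(password\s*[:=]\s*)\S+")


def redact(llm_response: str) -> str:
    """Mask e-mail addresses and password values in model output."""
    text = EMAIL_RE.sub("[REDACTED EMAIL]", llm_response)
    # Keep the "password:" label, replace only the value that follows it.
    text = PASSWORD_RE.sub(r"\1[REDACTED]", text)
    return text


print(redact("Contact admin@example.com, password: hunter2"))
```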

Inadequate Sandboxing

Vulnerabilities in this category arise when there are not enough measures in place to isolate the large language model from external resources or confidential systems, thereby increasing the chances of exploitation and unauthorized access.

Common vulnerabilities within inadequate sandboxing include insufficient separation between the LLM and sensitive resources, giving the LLM too much access to those resources, and failing to limit the LLM's functionality so that it can interact with other processes.

An example of a sandboxing vulnerability is a crafted prompt that tricks the LLM into revealing sensitive information from, or executing unauthorized commands on, a system it should not be able to reach.
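As a rough illustration of separating an LLM from sensitive resources, the sketch below confines any file path the model requests to a single directory. The `SANDBOX_ROOT` location and the `resolve_in_sandbox` helper are hypothetical, and real sandboxing would normally also rely on operating-system or container isolation rather than path checks alone.

```python
from pathlib import Path

# Hypothetical directory the LLM is allowed to read from.
SANDBOX_ROOT = Path("/srv/llm_sandbox")


def resolve_in_sandbox(requested: str) -> Path:
    """Resolve a requested path and refuse anything outside the sandbox."""
    root = SANDBOX_ROOT.resolve()
    # resolve() collapses ".." segments, so traversal attempts are exposed.
    candidate = (root / requested).resolve()
    if not candidate.is_relative_to(root):  # requires Python 3.9+
        raise PermissionError(f"Path escapes sandbox: {requested}")
    return candidate
```

With this check, a request for `notes/summary.txt` stays inside the sandbox, while `../../etc/passwd` or an absolute path such as `/etc/passwd` is rejected.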

Unauthorized Code Execution

Unauthorized code execution refers to the exploitation of LLMs through natural language prompts that carry malicious commands or code targeting the underlying system.

Common vulnerabilities in this category include failing to restrict user input, so that prompts succeed in executing unauthorized code; insufficient restrictions on the LLM, resulting in unwanted interactions; and unintentional exposure of underlying systems to the LLM.

An example of such an attack is an LLM that has unwanted and undetected interactions with a system API, resulting in unauthorized commands being executed through the LLM.
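To make the idea of restricting what an LLM can execute more concrete, here is a minimal sketch that validates a model-proposed command against an allowlist before anything runs. The `ALLOWED_COMMANDS` set and the `validate_command` helper are assumptions for this example, not part of the OWASP list.

```python
import shlex

# Hypothetical set of harmless commands the application permits.
ALLOWED_COMMANDS = {"ls", "date", "uptime"}

# Characters that could chain or redirect commands in a shell.
SHELL_METACHARACTERS = ";|&`$><\n"


def validate_command(llm_output: str) -> list[str]:
    """Return an argv list if the command is allowed, else raise."""
    if any(ch in llm_output for ch in SHELL_METACHARACTERS):
        raise PermissionError("Shell metacharacters are not allowed")
    argv = shlex.split(llm_output)
    if not argv or argv[0] not in ALLOWED_COMMANDS:
        raise PermissionError(f"Command not allowed: {llm_output!r}")
    return argv
```

The returned list could then be passed to `subprocess.run(argv, shell=False)`, so the command is never interpreted by a shell.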

SSRF Vulnerabilities

Server-Side Request Forgery (SSRF) vulnerabilities arise when an LLM is exploited to perform unintended requests or to access restricted resources such as APIs or data stores.

Major vulnerabilities in this category include insufficient input validation, inadequate resource restrictions, and network misconfigurations. These can result in the LLM initiating unauthorized requests, interacting with internal services, and having internal resources exposed to it.

One example of an SSRF vulnerability is a crafted prompt that asks the LLM to make a request to an internal service, bypassing existing access controls to reach sensitive information.
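Input validation for outbound requests might look something like the following sketch, which checks a URL before the application fetches it on the LLM's behalf. The `ALLOWED_HOSTS` set and the `is_safe_url` helper are hypothetical; the sketch does not resolve DNS, so it would not catch an allowed hostname that points to an internal address.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical external hosts the LLM integration may contact.
ALLOWED_HOSTS = {"api.example.com"}


def is_safe_url(url: str) -> bool:
    """Allow only HTTPS requests to explicitly allowlisted hostnames."""
    parsed = urlparse(url)
    host = parsed.hostname
    if parsed.scheme != "https" or not host:
        return False
    try:
        # Raw IP literals (e.g. 127.0.0.1, 169.254.169.254) are refused
        # outright; the allowlist would reject them too, this is explicit.
        ipaddress.ip_address(host)
        return False
    except ValueError:
        pass  # Not an IP literal, so fall through to the allowlist check.
    return host in ALLOWED_HOSTS
```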

Over-Reliance on LLM Content

The next type of vulnerability arises from over-dependence on LLM-generated content without human oversight, which can have harmful consequences. It can lead to the propagation of incorrect or misleading information and to a decline in human decision-making and critical thinking.

These vulnerabilities arise when organizations and users trust LLM output without any verification, which can lead to errors and miscommunication.

Common issues that arise from LLM over-dependency include accepting LLM-generated content as factual and bias-free, and using it in place of critical thinking, without verifying its credibility through human review.

Examples include generating news content on a wide variety of topics with an LLM and publishing it unchecked, thus perpetuating misinformation.

Inadequate AI Alignment

Such vulnerabilities arise when the LLM's objectives and behavior fail to align with its intended use. A vulnerability like this can lead to unintended consequences and harmful behavior.

Common AI alignment issues include poorly defined LLM objectives, improperly aligned training data, and insufficient testing. Such issues cause unintended model behavior, which can include the spread of misinformation.

An example would be an LLM designed to help with system administration that is misaligned and, as a result, executes harmful commands that affect the performance or security of the system.

Insufficient Access Controls

This range of issues arises from the improper implementation of access controls or authentication, which allows unauthorized users to access and interact with the LLM and exploit vulnerabilities.

Major issues that stem from insufficient access controls include failure to enforce strict authentication requirements, inadequate role-based access control (RBAC), and failure to provide proper access controls for LLM-generated content and actions.

Inadequate RBAC, for example, would leave users with access to far more resources and actions than their intended permissions allow.
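A role-based check can be sketched in a few lines. The roles, actions, and the `authorize` and `handle_request` helpers below are invented for illustration; a real system would tie roles to authenticated identities rather than accept them as plain arguments.

```python
# Hypothetical mapping of roles to the actions each role may perform.
ROLE_PERMISSIONS = {
    "viewer": {"query_model"},
    "admin": {"query_model", "update_system_prompt", "view_logs"},
}


def authorize(role: str, action: str) -> None:
    """Raise PermissionError unless the role explicitly grants the action."""
    # Unknown roles get an empty permission set, so access is denied by default.
    if action not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionError(f"Role {role!r} may not perform {action!r}")


def handle_request(role: str, action: str) -> str:
    """Run the access check before doing any work for the caller."""
    authorize(role, action)
    return f"{action} permitted for {role}"
```

The key design choice is denying by default: an action is allowed only when a role lists it explicitly.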

Improper Error Handling

This vulnerability results in the exposure of error messages or debugging information that reveal sensitive data, application details, or even potential attack vectors.

Common issues include the exposure of sensitive information through error messages, the leaking of debugging information, and the failure to handle error messages gracefully. Such vulnerabilities can cause the system to behave erratically or even crash.

An attacker trying to use error messages to gather sensitive details that could be used for a targeted attack is a prime example of improper error handling.
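Handling errors gracefully usually means logging the details internally and returning only a generic message to the caller. The sketch below assumes a hypothetical `call_model` function that fails with a message containing internal details; `safe_generate` and the response shape are likewise made up for this example.

```python
import logging
import uuid

logger = logging.getLogger("llm_app")


def call_model(prompt: str) -> str:
    """Hypothetical stand-in for a backend call that fails with internal details."""
    raise RuntimeError("DB connection failed: postgres://admin:secret@10.0.0.5/llm")


def safe_generate(prompt: str) -> dict:
    """Return model output, or a generic error that leaks no internals."""
    try:
        return {"ok": True, "text": call_model(prompt)}
    except Exception:
        # Full details, including the traceback, go to the server-side log only.
        error_id = uuid.uuid4().hex
        logger.exception("LLM request failed (error_id=%s)", error_id)
        return {
            "ok": False,
            "error": "Something went wrong. Please try again later.",
            "error_id": error_id,
        }
```

The `error_id` lets support staff find the matching log entry without exposing connection strings or stack traces to the user.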

Data Poisoning

This type of vulnerability starts early, at the training stage of the LLM, where maliciously manipulated data is used to introduce vulnerabilities or even backdoors into the model.

This could compromise the model's security, effectiveness, and ethical behavior. Common issues include the introduction of backdoors or vulnerabilities into the LLM, the injection of biases, and exploitation of the fine-tuning process.

A model trained on such poisoned data could be biased or give inappropriate responses.

Goal Of OWASP Top 10 for LLM

The major goal of setting an OWASP Top 10 vulnerabilities list for large language models is to educate developers, architects, managers, and everyone involved within an organization on the potential risks associated with the deployment of an LLM.

The main aim of OWASP was to create a vulnerability list that was based on potential impact, ease of exploitation, and chances of occurrence in real-life applications.

Additionally, the software security community wanted to raise awareness of these vulnerabilities, suggest remediation strategies, and ultimately improve the security posture of LLM applications.

Other Tools Available to Identify LLM Risks

Besides the OWASP Top 10 for LLMs, here are some other tools and frameworks that aid in identifying and resolving risks in LLMs.

GuardRails

Guardrails is a tool for creating programmable rules that govern the interaction between users and an AI application. It supports LangChain, a toolkit that includes templates for connecting LLMs with APIs and other software. Guardrails acts as an additional layer of security on top of this.

Hierarchical levels of artificial neural networks

Using the hierarchical levels of artificial neural networks, machine learning algorithms can perform feature extraction and transformation, which can help detect and mitigate vulnerabilities and other risks in large language models.

Conclusion

The OWASP Top 10 for LLMs helps individuals and organizations involved with large language models detect, gauge, and mitigate vulnerabilities and other security issues by telling them what to watch for.

These risks and vulnerabilities can compromise the integrity of the LLM in question, so it is critical that they be identified and resolved as soon as possible. With the rapid pace of AI development, it won't come as a surprise if the OWASP Top 10 for LLMs changes soon, but for now this version does a neat job of helping developers and organizations stay aware.